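Author: Tyan

Blog: noahsnail.com | CSDN | Jianshu (简书)

Disclaimer: The author translates papers for study purposes only. If this infringes any rights, please contact the author to have the post removed. Thank you!

Collection of translated papers: https://github.com/SnailTyan/deep-learning-papers-translation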

SinGAN: Learning a Generative Model from a Single Natural Image

Abstract

We introduce SinGAN, an unconditional generative model that can be learned from a single natural image. Our model is trained to capture the internal distribution of patches within the image, and is then able to generate high quality, diverse samples that carry the same visual content as the image. SinGAN contains a pyramid of fully convolutional GANs, each responsible for learning the patch distribution at a different scale of the image. This allows generating new samples of arbitrary size and aspect ratio, that have significant variability, yet maintain both the global structure and the fine textures of the training image. In contrast to previous single image GAN schemes, our approach is not limited to texture images, and is not conditional (i.e. it generates samples from noise). User studies confirm that the generated samples are commonly confused to be real images. We illustrate the utility of SinGAN in a wide range of image manipulation tasks.

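Abstract

We propose SinGAN, an unconditional generative model that can be learned from a single natural image. Through training, our model captures the internal distribution of patches within the image and can then generate high-quality, diverse samples that carry the same visual content as the training image. SinGAN contains a pyramid of fully convolutional GANs, each responsible for learning the patch distribution at a different scale of the image. This makes it possible to generate new samples of arbitrary size and aspect ratio that show significant variability while preserving both the global structure and the fine textures of the training image. Compared with previous single-image GAN schemes, our method is not limited to texture images and is unconditional (that is, it generates samples from noise). User studies show that the generated samples are often mistaken for real images. We demonstrate the utility of SinGAN in a variety of image manipulation tasks.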

1. Introduction

Generative Adversarial Nets (GANs) [19] have made a dramatic leap in modeling high dimensional distributions of visual data. In particular, unconditional GANs have shown remarkable success in generating realistic, high quality samples when trained on class specific datasets (e.g., faces [33], bedrooms [47]). However, capturing the distribution of highly diverse datasets with multiple object classes (e.g., ImageNet [12]) is still considered a major challenge and often requires conditioning the generation on another input signal [6] or training the model for a specific task (e.g., super-resolution [30], inpainting [41], retargeting [45]).

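1. Introduction

Generative adversarial networks (GANs) [19] have made a great leap in modeling the high-dimensional distributions of visual data. In particular, unconditional GANs trained on class-specific datasets (e.g., faces [33], bedrooms [47]) have achieved remarkable success in generating realistic, high-quality samples. However, capturing the distribution of highly diverse datasets with multiple object classes (e.g., ImageNet [12]) remains a major challenge, and it often requires conditioning the generation on another input signal [6] or training the model for a specific task (e.g., super-resolution [30], inpainting [41], retargeting [45]).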

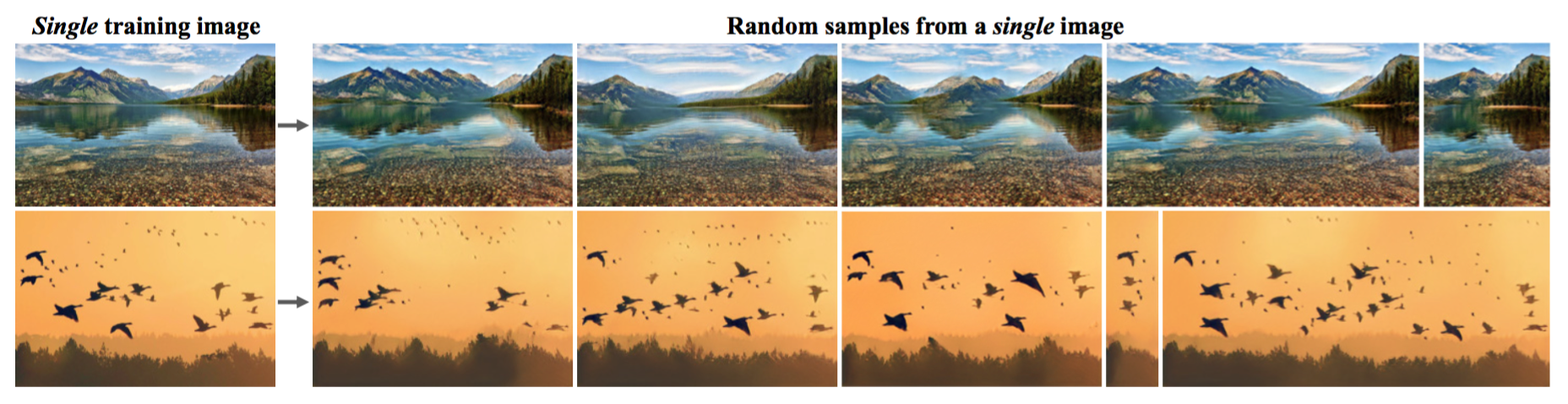

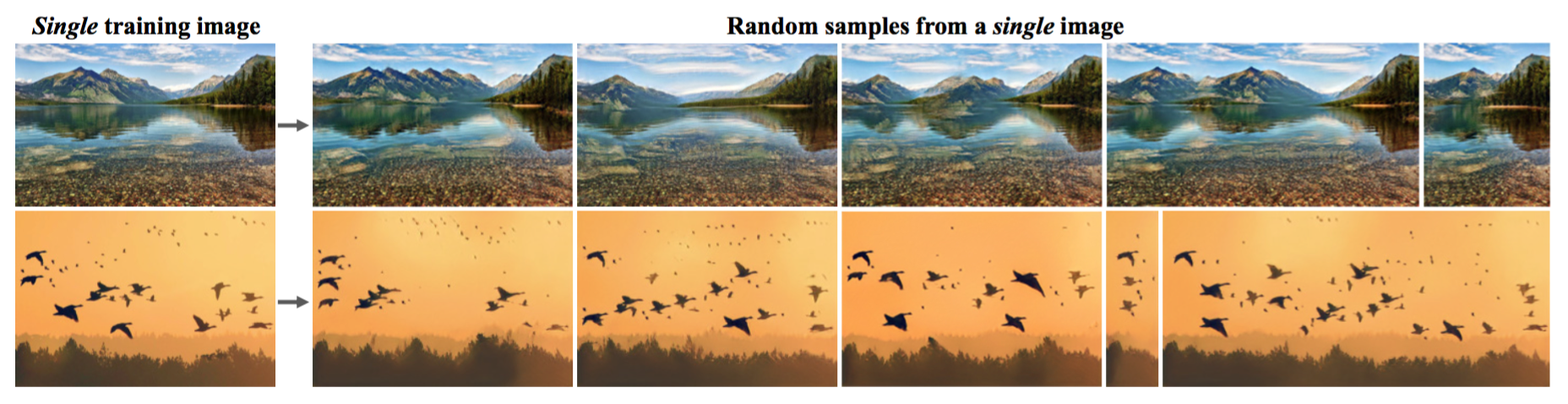

Here, we take the use of GANs into a new realm: unconditional generation learned from a single natural image. Specifically, we show that the internal statistics of patches within a single natural image typically carry enough information for learning a powerful generative model. SinGAN, our new single image generative model, allows us to deal with general natural images that contain complex structures and textures, without the need to rely on the existence of a database of images from the same class. This is achieved by a pyramid of fully convolutional light-weight GANs, each responsible for capturing the distribution of patches at a different scale. Once trained, SinGAN can produce diverse high quality image samples (of arbitrary dimensions), which semantically resemble the training image, yet contain new object configurations and structures (Fig. 1).

Figure 1: Image generation learned from a single training image. We propose SinGAN, a new unconditional generative model trained on a single natural image. Our model learns the image’s patch statistics across multiple scales, using a dedicated multi-scale adversarial training scheme; it can then be used to generate new realistic image samples that preserve the original patch distribution while creating new object configurations and structures.

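We take the use of GANs into a new domain: unconditional generation learned from a single natural image. Specifically, we show that the internal statistics of patches within a single natural image usually carry enough information to learn a powerful generative model. Our new single-image generative model, SinGAN, lets us handle general natural images that contain complex structures and textures, without relying on the existence of a dataset of images from the same class. This is achieved with a pyramid of fully convolutional, lightweight GANs, each responsible for capturing the patch distribution at a different scale. Once trained, SinGAN can generate diverse, high-quality image samples (of arbitrary dimensions) that are semantically similar to the training image yet contain new object configurations and structures (Fig. 1).

Figure 1: Image generation learned from a single training image. We propose SinGAN, a new unconditional generative model trained on a single natural image. Our model learns the image's patch statistics at multiple scales using a dedicated multi-scale adversarial training scheme; it can then be used to generate new realistic image samples that preserve the original patch distribution while creating new object configurations and structures.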
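The coarse-to-fine idea described above (a pyramid of fully convolutional generators, one per scale, producing samples of arbitrary dimensions) can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function names, the scale factor of 0.75, the single random 3x3 kernel standing in for each trained generator, and the residual update are all assumptions made here for illustration. The only point it shows is that a fully convolutional stack driven by noise yields an output whose size follows the requested size.

```python
import numpy as np

def pyramid_shapes(height, width, num_scales, scale_factor=0.75):
    """Return (h, w) for each scale, coarsest first (scale_factor is hypothetical)."""
    shapes = []
    for n in range(num_scales - 1, -1, -1):
        s = scale_factor ** n
        shapes.append((max(1, round(height * s)), max(1, round(width * s))))
    return shapes

def upsample_nearest(img, shape):
    """Nearest-neighbour resize of a 2-D array to the given shape."""
    rows = np.arange(shape[0]) * img.shape[0] // shape[0]
    cols = np.arange(shape[1]) * img.shape[1] // shape[1]
    return img[rows][:, cols]

def conv3x3_same(img, kernel):
    """3x3 filtering with edge padding; works for any input size."""
    padded = np.pad(img, 1, mode="edge")
    out = np.zeros_like(img)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def sample(shapes, kernels, rng, noise_std=0.1):
    """Draw one sample coarse-to-fine; each kernel is a stand-in for a trained generator."""
    h0, w0 = shapes[0]
    # Coarsest scale: pure noise in, image out.
    image = np.tanh(conv3x3_same(rng.standard_normal((h0, w0)), kernels[0]))
    for shape, kernel in zip(shapes[1:], kernels[1:]):
        up = upsample_nearest(image, shape)
        noise = noise_std * rng.standard_normal(shape)
        # Assumed residual update: refine the upsampled coarse image with fresh noise.
        image = up + np.tanh(conv3x3_same(up + noise, kernel))
    return image

rng = np.random.default_rng(0)
shapes = pyramid_shapes(64, 128, num_scales=4)
kernels = [0.1 * rng.standard_normal((3, 3)) for _ in shapes]
out = sample(shapes, kernels, rng)
print(shapes, out.shape)
```

Because no layer depends on a fixed spatial size, calling `pyramid_shapes` with a different height and width changes the output dimensions without touching the (stand-in) generators.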

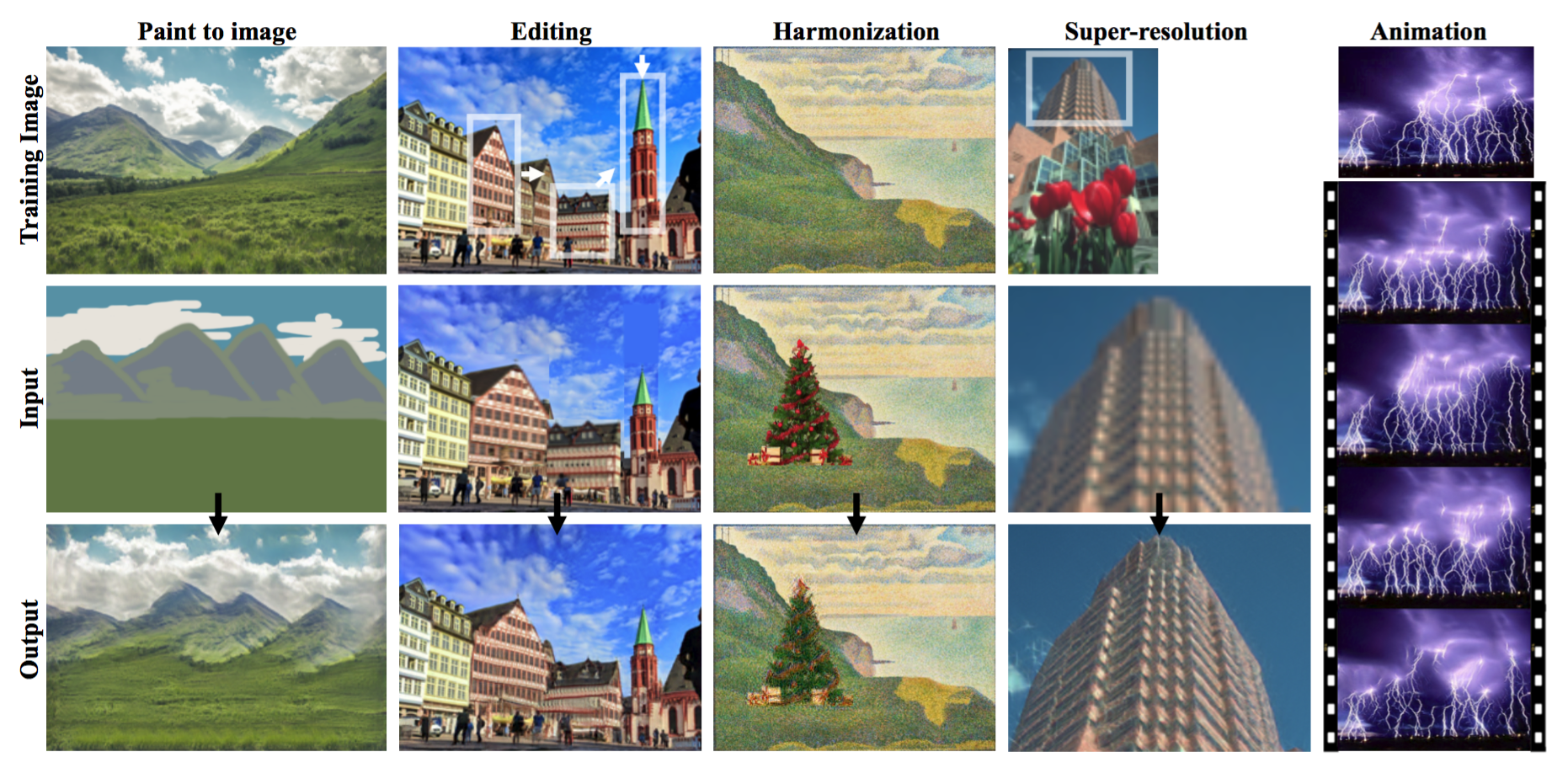

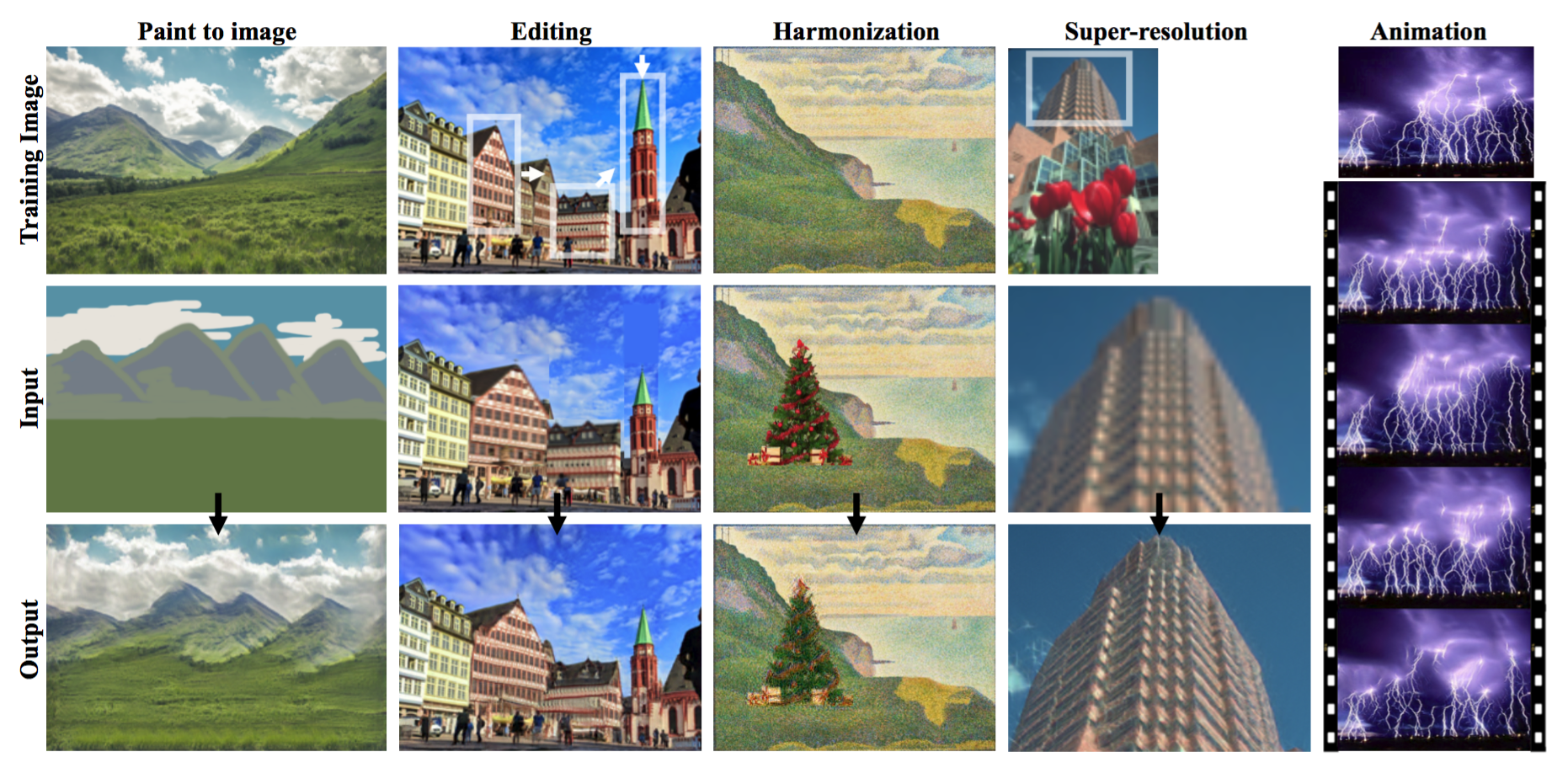

Modeling the internal distribution of patches within a single natural image has been long recognized as a powerful prior in many computer vision tasks [64]. Classical examples include denoising [65], deblurring [39], super-resolution [18], dehazing [2, 15], and image editing [37, 21, 9, 11, 50]. The most closely related work in this context is [48], where a bidirectional patch similarity measure is defined and optimized to guarantee that the patches of an image after manipulation are the same as the original ones. Motivated by these works, here we show how SinGAN can be used within a simple unified learning framework to solve a variety of image manipulation tasks, including paint-to-image, editing, harmonization, super-resolution, and animation from a single image. In all these cases, our model produces high quality results that preserve the internal patch statistics of the training image (see Fig. 2 and our project webpage). All tasks are achieved with the same generative network, without any additional information or further training beyond the original training image.

Figure 2: Image manipulation. SinGAN can be used in various image manipulation tasks, including: transforming a paint (clipart) into a realistic photo, rearranging and editing objects in the image, harmonizing a new object into an image, image super-resolution and creating an animation from a single input. In all these cases, our model observes only the training image (first row) and is trained in the same manner for all applications, with no architectural changes or further tuning (see Sec. 4).

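Modeling the internal distribution of patches within a single natural image has long been regarded as a powerful prior in many computer vision tasks [64]. Classical examples include denoising [65], deblurring [39], super-resolution [18], dehazing [2, 15], and image editing [37, 21, 9, 11, 50]. Among these works, the most closely related is [48], which defines and optimizes a bidirectional patch similarity measure to ensure that the patches of the manipulated image are the same as those of the original image. Motivated by these works, we show here that SinGAN can be used within a simple, unified learning framework to solve a range of image manipulation tasks, including paint-to-image, editing, harmonization, super-resolution, and animation from a single image. In all of these tasks, our model produces high-quality results that preserve the internal patch statistics of the training image (see Fig. 2 and our project webpage). All tasks are accomplished with the same generative network, without any additional information or further training beyond the original training image.

Figure 2: Image manipulation. SinGAN can be used for a variety of image manipulation tasks, including: converting a painting (clipart) into a realistic photo, rearranging and editing objects in an image, harmonizing a new object into an image, image super-resolution, and creating an animation from a single input image. In all these cases, our model observes only the training image (first row) and is trained in the same way for every application, with no architectural changes or further tuning (see Sec. 4).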
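To make the bidirectional patch similarity idea from [48] more concrete, here is a brute-force NumPy sketch. It follows the commonly cited form of that measure as recalled here, not anything stated in this post: a "completeness" term (every source patch should have a close match in the target) plus a "coherence" term (every target patch should have a close match in the source), each averaged over patches. The function names, the 3x3 patch size, and the squared-error patch distance are assumptions for illustration.

```python
import numpy as np

def extract_patches(img, size):
    """Return all overlapping size x size patches of a 2-D array as flat rows."""
    h, w = img.shape
    patches = [img[y:y + size, x:x + size].ravel()
               for y in range(h - size + 1)
               for x in range(w - size + 1)]
    return np.stack(patches)

def mean_nearest_distance(a, b):
    """Average, over patches in a, of the squared distance to the closest patch in b."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)
    return d2.min(axis=1).mean()

def bidirectional_dissimilarity(source, target, size=3):
    """Completeness (source -> target) plus coherence (target -> source)."""
    ps = extract_patches(source, size)
    pt = extract_patches(target, size)
    completeness = mean_nearest_distance(ps, pt)
    coherence = mean_nearest_distance(pt, ps)
    return completeness + coherence

rng = np.random.default_rng(0)
image = rng.random((8, 8))
cropped = image[:, :6]  # a crop is coherent with the source but not complete
print(bidirectional_dissimilarity(image, image))
print(bidirectional_dissimilarity(image, cropped))
```

A crop of the source contains only patches that already exist in the source, so its coherence term is zero, while the completeness term penalizes the source patches that the crop lost. The all-pairs distance matrix is only practical for small images; a real system would use an approximate nearest-neighbour search.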

1.1. Related Work

Single image deep models. Several recent works proposed to “overfit” a deep model to a single training example [51, 60, 46, 7, 1]. However, these methods are designed for specific tasks (e.g., super resolution [46], texture expansion [60]). Shocher et al. [44, 45] were the first to introduce an internal GAN based model for a single natural image, and illustrated it in the context of retargeting. However, their generation is conditioned on an input image (i.e., mapping images to images) and is not used to draw random samples. In contrast, our framework is purely generative (i.e., maps noise to image samples), and thus suits many different image manipulation tasks. Unconditional single image GANs have been explored only in the context of texture generation [3, 27, 31]. These models do not generate meaningful samples when trained on non-texture images (Fig. 3). Our method, on the other hand, is not restricted to texture and can handle general natural images (e.g., Fig. 1).

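1.1. Related Work

Single image deep models. Several recent works have proposed "overfitting" a deep model to a single training example [51, 60, 46, 7, 1]. However, these methods are designed for specific tasks (e.g., super-resolution [46], texture expansion [60]). Shocher et al. [44, 45] were the first to introduce an internal GAN-based model for a single natural image, and they illustrated it in the context of retargeting. However, their generation is conditioned on an input image (i.e., it maps images to images) and is not used to draw random samples. In contrast, our framework is purely generative (i.e., it maps noise to image samples) and therefore suits many different image manipulation tasks. Unconditional single-image GANs have been explored only in the context of texture generation [3, 27, 31]. When trained on non-texture images, these models do not generate meaningful samples (Fig. 3). Our method, on the other hand, is not restricted to textures and can handle general natural images (e.g., Fig. 1).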

References

[1] Yuki M Asano, Christian Rupprecht, and Andrea Vedaldi. Surprising effectiveness of few-image unsupervised feature learning. arXiv preprint arXiv:1904.13132, 2019. 2

[2] Yuval Bahat and Michal Irani. Blind dehazing using internal patch recurrence. In 2016 IEEE International Conference on Computational Photography (ICCP), pages 1–9. IEEE, 2016. 1

[3] Urs Bergmann, Nikolay Jetchev, and Roland Vollgraf. Learning texture manifolds with the periodic spatial GAN. arXiv preprint arXiv:1705.06566, 2017. 2, 4

[4] Yochai Blau, Roey Mechrez, Radu Timofte, Tomer Michaeli, and Lihi Zelnik-Manor. The 2018 PIRM challenge on perceptual image super-resolution. In European Conference on Computer Vision Workshops, pages 334–355. Springer, 2018. 8

[5] Yochai Blau and Tomer Michaeli. The perception-distortion tradeoff. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6228–6237, 2018. 8

[6] Andrew Brock, Jeff Donahue, and Karen Simonyan. Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096, 2018. 1

[7] Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A Efros. Everybody dance now. arXiv preprint arXiv:1808.07371, 2018. 2

[8] Wengling Chen and James Hays. Sketchygan: towards diverse and realistic sketch to image synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 9416–9425, 2018. 2

[9] Taeg Sang Cho, Moshe Butman, Shai Avidan, and William T Freeman. The patch transform and its applications to image editing. In 2008 IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE, 2008. 1

[10] Tali Dekel, Chuang Gan, Dilip Krishnan, Ce Liu, and William T Freeman. Sparse, smart contours to represent and edit images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3511–3520, 2018. 2

[11] Tali Dekel, Tomer Michaeli, Michal Irani, and William T Freeman. Revealing and modifying non-local variations in a single image. ACM Transactions on Graphics (TOG), 34(6):227, 2015. 1

[12] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A Large-Scale Hierarchical Image Database. In CVPR09, 2009. 1

[13] Emily L Denton, Soumith Chintala, Rob Fergus, et al. Deep generative image models using a laplacian pyramid of adversarial networks. In Advances in neural information processing systems, pages 1486–1494, 2015. 3

[14] Bradley Efron. Bootstrap methods: another look at the jackknife. In Breakthroughs in statistics, pages 569–593. Springer, 1992. 6

[15] Gilad Freedman and Raanan Fattal. Image and video upscaling from local self-examples. ACM Transactions on Graphics (TOG), 30(2):12, 2011. 1

[16] Leon Gatys, Alexander S Ecker, and Matthias Bethge. Texture synthesis using convolutional neural networks. In Advances in neural information processing systems, pages 262–270, 2015. 2

[17] Leon A Gatys, Alexander S Ecker, and Matthias Bethge. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2414–2423, 2016. 7, 8

[18] Daniel Glasner, Shai Bagon, and Michal Irani. Super-resolution from a single image. In 2009 IEEE 12th International Conference on Computer Vision (ICCV), pages 349–356. IEEE, 2009. 1, 7

[19] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems, pages 2672–2680, 2014. 1

[20] Ishaan Gulrajani, Faruk Ahmed, Martin Arjovsky, Vincent Dumoulin, and Aaron C Courville. Improved training of wasserstein GANs. In Advances in Neural Information Processing Systems, pages 5767–5777, 2017. 4

[21] Kaiming He and Jian Sun. Statistics of patch offsets for image completion. In European Conference on Computer Vision, pages 16–29. Springer, 2012. 1

[22] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016. 4

[23] Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. GANs trained by a two time-scale update rule converge to a local nash equilibrium. In Advances in Neural Information Processing Systems, pages 6626–6637, 2017. 5, 6

[24] Xun Huang, Yixuan Li, Omid Poursaeed, John Hopcroft, and Serge Belongie. Stacked generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5077–5086, 2017. 3

[25] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167, 2015. 4

[26] Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. Image-to-image translation with conditional adversarial networks. arXiv preprint, 2017. 3, 4, 5

[27] Nikolay Jetchev, Urs Bergmann, and Roland Vollgraf. Texture synthesis with spatial generative adversarial networks. Workshop on Adversarial Training, NIPS, 2016. 2, 4

[28] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of GANs for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196, 2017. 3

[29] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. arXiv preprint arXiv:1812.04948, 2018. 3

[30] Christian Ledig, Lucas Theis, Ferenc Huszar, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. Photorealistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4681–4690, 2017. 1, 7, 8

[31] Chuan Li and Michael Wand. Precomputed real-time texture synthesis with markovian generative adversarial networks. In European Conference on Computer Vision, pages 702–716. Springer, 2016. 2, 3, 4

[32] Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, and Kyoung Mu Lee. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 136–144, 2017. 7

[33] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, pages 3730–3738, 2015. 1

[34] Fujun Luan, Sylvain Paris, Eli Shechtman, and Kavita Bala. Deep painterly harmonization. arXiv preprint arXiv:1804.03189, 2018. 8

[35] David Martin, Charless Fowlkes, Doron Tal, and Jitendra Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV), page 416. IEEE, 2001. 4, 8

[36] Michael Mathieu, Camille Couprie, and Yann LeCun. Deep multi-scale video prediction beyond mean square error. arXiv preprint arXiv:1511.05440, 2015. 4

[37] Roey Mechrez, Eli Shechtman, and Lihi Zelnik-Manor. Saliency driven image manipulation. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1368–1376. IEEE, 2018. 1

[38] Roey Mechrez, Itamar Talmi, and Lihi Zelnik-Manor. The contextual loss for image transformation with non-aligned data. In Proceedings of the European Conference on Computer Vision (ECCV), pages 768–783, 2018. 7, 8

[39] Tomer Michaeli and Michal Irani. Blind deblurring using internal patch recurrence. In European Conference on Computer Vision, pages 783–798. Springer, 2014. 1

[40] Anish Mittal, Rajiv Soundararajan, and Alan C Bovik. Making a completely blind image quality analyzer. IEEE Signal Processing Letters, 20(3):209–212, 2013. 7, 8

[41] Deepak Pathak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, and Alexei A Efros. Context encoders: Feature learning by inpainting. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2536–2544, 2016. 1

[42] Guim Perarnau, Joost van de Weijer, Bogdan Raducanu, and Jose M Alvarez. Invertible conditional GANs for image editing. arXiv preprint arXiv:1611.06355, 2016. 2

[43] Patsorn Sangkloy, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. Scribbler: Controlling deep image synthesis with sketch and color. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5400–5409, 2017. 2

[44] Assaf Shocher, Shai Bagon, Phillip Isola, and Michal Irani. InGAN: Capturing and remapping the “DNA” of a natural image. arXiv preprint arXiv:1812.00231, 2018. 2

[45] Assaf Shocher, Shai Bagon, Phillip Isola, and Michal Irani. InGAN: Capturing and Remapping the “DNA” of a Natural Image. International Conference on Computer Vision (ICCV), 2019. 1, 2

[46] Assaf Shocher, Nadav Cohen, and Michal Irani. Zero-Shot Super-Resolution using Deep Internal Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3118–3126, 2018. 2, 7

[47] Nathan Silberman, Derek Hoiem, Pushmeet Kohli, and Rob Fergus. Indoor segmentation and support inference from rgbd images. In European Conference on Computer Vision, pages 746–760. Springer, 2012. 1

[48] Denis Simakov, Yaron Caspi, Eli Shechtman, and Michal Irani. Summarizing visual data using bidirectional similarity. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 1–8. IEEE, 2008. 1

[49] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1–9, 2015. 6

[50] Tal Tlusty, Tomer Michaeli, Tali Dekel, and Lihi Zelnik-Manor. Modifying non-local variations across multiple views. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6276–6285, 2018. 1

[51] Dmitry Ulyanov, Andrea Vedaldi, and Victor Lempitsky. Deep image prior. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. 2, 7

[52] Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. High-resolution image synthesis and semantic manipulation with conditional GANs. arXiv preprint arXiv:1711.11585, 2017. 2, 3

[53] Xiaolong Wang and Abhinav Gupta. Generative image modeling using style and structure adversarial networks. 2016. 2

[54] Wenqi Xian, Patsorn Sangkloy, Varun Agrawal, Amit Raj, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. Texturegan: Controlling deep image synthesis with texture patches. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018. 2

[55] Xuemiao Xu, Liang Wan, Xiaopei Liu, Tien-Tsin Wong, Liansheng Wang, and Chi-Sing Leung. Animating animal motion from still. ACM Transactions on Graphics (TOG), 27(5):117, 2008. 8

[56] Jiahui Yu, Zhe Lin, Jimei Yang, Xiaohui Shen, Xin Lu, and Thomas S Huang. Generative image inpainting with contextual attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5505–5514, 2018. 2

[57] Kai Zhang, Wangmeng Zuo, Yunjin Chen, Deyu Meng, and Lei Zhang. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Transactions on Image Processing, 26(7):3142–3155, 2017. 4

[58] Richard Zhang, Phillip Isola, and Alexei A Efros. Colorful image colorization. In European conference on computer vision, pages 649–666. Springer, 2016. 5

[59] Bolei Zhou, Agata Lapedriza, Jianxiong Xiao, Antonio Torralba, and Aude Oliva. Learning deep features for scene recognition using places database. In Advances in neural information processing systems, pages 487–495, 2014. 4, 6

[60] Yang Zhou, Zhen Zhu, Xiang Bai, Dani Lischinski, Daniel Cohen-Or, and Hui Huang. Non-stationary texture synthesis by adversarial expansion. arXiv preprint arXiv:1805.04487, 2018. 2

[61] Jun-Yan Zhu, Philipp Krahenbuhl, Eli Shechtman, and Alexei A Efros. Generative visual manipulation on the natural image manifold. In European Conference on Computer Vision (ECCV), pages 597–613. Springer, 2016. 2

[62] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In IEEE International Conference on Computer Vision, 2017. 2, 4

[63] Jun-Yan Zhu, Richard Zhang, Deepak Pathak, Trevor Darrell, Alexei A Efros, Oliver Wang, and Eli Shechtman. Toward multimodal image-to-image translation. In Advances in Neural Information Processing Systems, pages 465–476, 2017. 4

[64] Maria Zontak and Michal Irani. Internal statistics of a single natural image. In CVPR 2011, pages 977–984. IEEE, 2011. 1

[65] Maria Zontak, Inbar Mosseri, and Michal Irani. Separating signal from noise using patch recurrence across scales. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1195–1202, 2013. 1